Throughout history, the introduction of new technology has tended to trigger a race between the forces of democratization and those of autocracy.

The radio, film, the internet, social media — all of them saw a scramble between people seeking to use the new technology to consolidate top-down power and those who would use technology to democratize.

Something similar is happening right now with artificial intelligence.

AI’s rapid advancement and growing prevalence (and the massive financial investment behind it) are making the technology an increasingly appealing target for authoritarian capture. Already, autocratic actors are deploying AI to enhance the tactics in their playbook. But so too are some democracy proponents starting to adopt and adapt to the new technology (more on how below).

We don’t yet know how this contest will shake out. As Dario Amodei, co-founder of Anthropic, has written:

> Human conflict is adversarial and AI can in principle help both the “good guys” and the “bad guys”. If anything, some structural factors seem worrying: AI seems likely to enable much better propaganda and surveillance, both major tools in the autocrat’s toolkit. It’s therefore up to us as individual actors to tilt things in the right direction: if we want AI to favor democracy and individual rights, we are going to have to fight for that outcome.

How can those of us committed to protecting democracy — AI skeptics and boosters alike — work to ensure that the technology does not become predominantly a tool of autocracy?

The lesson from past technological races is clear: It is not enough to simply use technology for good, nor is it adequate to focus exclusively on regulating technology at the expense of its responsible uses. Instead, we must embrace a dual mandate — defending against autocratic applications of AI while harnessing its capabilities for democracy.

We must quickly put in place the guardrails to check AI’s abuses and anticipate its downstream harms — all the while experimenting and putting it to work for civic good.

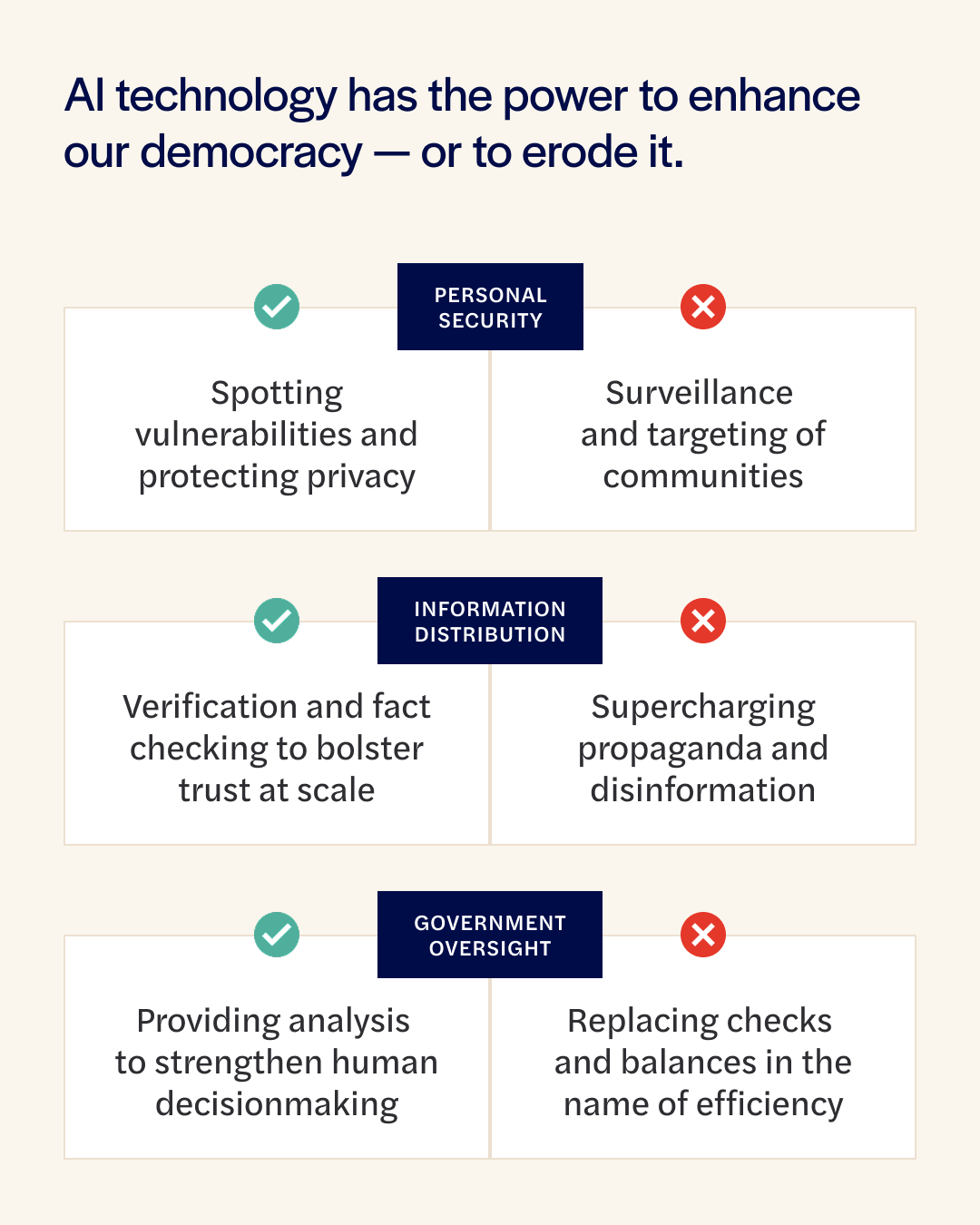

We don’t know exactly how AI will impact democracy and authoritarianism. It seems, however, that AI will work through three main pathways, for good or ill: One, surveillance and privacy. Two, propaganda and trust. And three, replacing or bolstering checks-and-balances.

On each of these three dimensions, we should seek both to guard against the harms and to strengthen the benefits.

Surveillance and privacy

AI is already capable of digesting enormous amounts of information almost simultaneously. This can be easily and profoundly abused by autocrats, whether through surveillance at scale via tools like facial recognition technology, or through targeting specific communities and their communications.

At the same time, if deployed correctly, AI can be used as a tool to help protect privacy and security. It can help spot vulnerabilities, monitor threats, and protect critical systems both online and off.

How AI is strengthening surveillance techniques: China is likely the most sophisticated country when it comes to deploying AI tools for surveillance (including tools based on American AI models). That said, we’re already seeing examples of AI surveillance by the Trump administration. The State Department is reportedly using AI tools to review the social media presence of foreign student visa holders. DOGE has used AI to monitor the communications of civil servants, scanning for language deemed “hostile” to the administration. Expect attempts at this sort of AI-powered surveillance to significantly increase in the years ahead.

How AI is protecting privacy: While less notable than surveillance, there are examples where AI is being used as a safeguard of privacy, of sensitive information, and of critical systems. Microsoft’s Azure AI Language Personally Identifiable Information detection uses machine learning to identify and redact sensitive information from inputted data, including across text and audio formats. Protect Democracy’s own VoteShield platform uses machine learning tools to surface threats to voter information by identifying unusual changes that would not be apparent without these algorithms’ ability to spot subtle patterns. These examples are not answers to essential questions around how AI should be designed, trained, and deployed to safeguard privacy rights, nor do they address how AI is increasing the urgency of long-needed updates to U.S. data privacy laws. But they do offer examples of AI applications that merit exploring alongside tackling these critical governance needs.

Propaganda and trust

In the wrong hands, AI tools can supercharge propaganda and disinformation efforts. Already, generative AI is capable of producing high-quality synthetic content across formats (text, video, image, and audio) that is increasingly indistinguishable from human-generated content. Authoritarian actors (and AI slop manufacturers) have already discovered AI’s utility for flooding the zone with high volumes of cheaply created synthetic content. AI-fueled propaganda efforts threaten to swamp our information ecosystem with disinformation, deepfakes, and simple noise.

In parallel, AI, like other advanced technology before it, is changing where and how we get our information — from AI-generated summaries at the top of search results to generative AI chatbots. This has already raised real questions around their reliability as sources for accurate information, particularly given risks of hallucination. But it also makes AI the latest target for authoritarian efforts to censor and control information.

Together these point to perhaps the biggest threat that AI’s misuse poses to democracy — simply eroding, either deliberately or accidentally, the foundation of trust and shared reality necessary for democracy to work.

At the same time, there are still ways that AI can be used to bolster — not undermine — our information environment and trust. In fact-checkers’ hands, it can be used to speed the verification of information and scale the number of claims they review. For trust and safety workers, it is a tool for supercharging their ability to analyze and moderate the flood of content on modern social media and messaging platforms. And AI’s pattern detection capabilities have already made it an important tool for analyzing public input and identifying areas of common ground.

How AI is turbocharging propaganda: Political actors are already sharing deepfakes and AI content to try to erode democracy. Last year, as The Washington Post reported, a former deputy Palm Beach County sheriff who fled to Moscow worked closely with Russian military intelligence “to pump out deepfakes and circulate misinformation [targeting] Vice President Kamala Harris’s campaign.” In 2023, deepfakes may have influenced the Slovakian election. AI-generated images have become a mainstay of both President Trump’s social media and official administration accounts. At the same time, as generative AI chatbots have become prevalent, authoritarians have moved quickly to account for them in their pursuit of information censorship. The Chinese government requires Chinese consumer-facing generative AI products to operationalize strict content controls and ensure the “truth, accuracy, objectivity, and diversity” of training data as defined by the Chinese Communist Party. The result is evident in chatbots’ refusal to engage with topics like Tiananmen Square and their erasure of mentions of the word “Tibet.” Here at home, the Trump administration’s AI Action Plan and executive order on “Preventing Woke AI in the Federal Government” demand that the U.S. government contract only with large language model (LLM) developers who ensure their systems are free from “ideological bias.” While the administration may view this as a corrective to what some on the American right criticize as liberal biases built into AI systems, “ideological bias” is also a capacious term that, when coupled with the administration’s generally censorious posture, may already be driving platforms to make content decisions they think will be viewed favorably by the administration. And that’s to say nothing of how AI tools may replicate human biases even absent a government thumb on the scale.

How AI is helping bolster trust: A number of new tools have been developed that use AI to check facts and protect the information environment. Meedan’s Check seeks to help journalists and human rights groups exchange information directly with audiences via direct messaging apps. It allows trusted outlets to create tip lines that both gather information on the ground and immediately answer questions from readers based on their materials. (In short, instead of using AI to displace journalists, Check uses it to give them new tools to make their reporting accessible to readers.) Meanwhile, Thorn’s Safer uses AI to monitor and detect child sexual abuse material online. Alongside these information ecosystem-focused tools are a growing number of examples of AI being deployed to facilitate and analyze public dialogue. Pol.is is an open-source tool that uses AI to identify consensus across diverse, even polarized, groups and remains a key component of Taiwan’s ongoing efforts to engage citizens in national policymaking. Even more recently, peace-building organization BuildUp is leveraging AI-automated WhatsApp conversations to foster large-scale, inclusive dialogue in conflict areas like Sudan.

Replacing or bolstering checks-and-balances

Finally, AI in the wrong hands has the potential to consolidate power in government or hierarchies if used to justify eliminating checks-and-balances and the people who power them. Right now, if a president or government leader wants to undertake a repressive program, it is not as simple as issuing a single directive. Instead, they still must contend with procedural guardrails designed to check unilateral authority and offer transparency into decision making — and compel a long chain of people to carry out that agenda. While far from a fail-safe, this provides a certain amount of resistance to harmful actions or decisions. Imagine, however, if these guardrails and their human decisionmakers are swept away as “inefficient” under the pretense that AI can effectively replace them.

Conversely, in the best uses, AI can be deployed not to eliminate checks-and-balances and human decision making, but rather to strengthen them — equipping people with better tools to digest and analyze information and render careful judgment. Not only does that bolster democracy by effectively strengthening institutional resilience to top-down coercion, but it could actually facilitate efficiency gains and more informed decisions.

How AI is replacing checks-and-balances: From 2015 to 2018, millions of voters in India may have been disenfranchised after their names were automatically deleted from the rolls by an algorithm designed to identify ineligible voters by integrating the country’s biometric databases and voter rolls. Justified by claims of addressing inefficiencies and strengthening democracy, this effort had no shortage of failings, and key amongst them was the lack of transparency around the AI-enabled software employed and the decisions it made. Today, the Trump administration is reportedly using AI to sift through immigration data and identify potential targets in the pursuit of what ICE Director Todd Lyons has characterized as “like [Amazon] Prime, but for human beings” for immigration enforcement. Here too AI is being deployed under the guise of efficiency, but along the way it is bypassing guardrails and obscuring the underlying decision-making.

How AI is bolstering human decision-makers: In Virginia, a local elections official is using AI to better predict voter turnout, which allows election resources to be deployed more effectively. Similarly, in Washington, D.C., local government officials developed an AI chatbot that helps field questions from staff about policies, procedures, and system functionality in welfare case management. Uses of AI like these aren’t reducing checkpoints or taking humans out of the decision; instead they do the opposite. They bolster these decision points by making key information more available, digestible, and operable. This makes those systems less vulnerable to top-down abuses.

Introducing the AI for Democracy Action Lab

All of this is why Protect Democracy is creating the AI for Democracy Action Lab (AI-DAL).

If we want AI to bolster democracy, and we don’t want it to empower authoritarianism, we need a two-pronged strategy. We must both accelerate democracy-enhancing uses of the technology and mitigate its abuse through regulation and other guardrails.

These strategies need to inform one another. They need to be two parts of the same program pursued together. That way, over time, we can push AI design choices to incentivize democracy-enhancing uses and cut off the most dangerous autocracy-enabling ones.

The AI for Democracy Action Lab is a place to join together with tech innovators, legal and policy experts, software engineers, nonprofit leaders, and others to not only defend against AI’s dangers but seize its possibilities for strengthening self-government. This builds on our experience integrating a variety of tools from product development to policy.

This lab will have two mutually reinforcing goals:

One: Incubating and accelerating development of AI-enabled civic tools. We will partner with organizations that have been pioneering AI’s pro-democracy applications — including key allies in state and local governments and other civil society organizations — to identify the strongest use cases for AI to advance democracy and fast-track responsive product development. Our lab will organize a learning community that shares expertise, best practices, and learnings from success and failure alike so that innovations can be replicated and scaled responsibly.

Two: Developing policy and governance solutions that check AI-enhanced threats to democracy. We will serve as a trusted, nonpartisan resource for platforms and regulators grappling with how to govern these systems in the public interest. The focus will be especially on how constitutional principles — checks-and-balances, individual freedoms, and the right to privacy — can be embedded in forward-looking data and tech governance regimes.

We should be clear that we don’t see this lab as a prediction of whether or not we can plausibly succeed. We do not know whether the potential harms of AI can be mitigated or if positive, pro-democracy uses of the technology can truly work at scale. In the long term, we do not know if democracy or autocracy will benefit from AI, nor do we know for certain the myriad ways the technology could benefit or impair humanity more broadly. (Indeed, past predictions of how technology will influence society tend to be wildly off-base.)

It may be that the most alarming warnings about AI come true. Or the AI race may end up being less significant than many of us hope or fear, with the potential for weaponization and the positive uses both ending up muted in comparison to other revolutionary new technologies in the past. We don’t know.

Rather, we are creating this lab on the basis of that humility. We do not know how the race will end.

Simply, we recognize we are all already in the race — whether we like it or not.

Major development in 2000 Mules case

Here’s Protect Democracy’s Jane Bentrott with an important update:

This week, a federal judge ruled that the creators of 2000 Mules “never had any evidence” for their false claims accusing our client of being a criminal “ballot mule.” The case will proceed to trial on defamation and Ku Klux Klan Act claims, with the court finding that a jury could conclude defendants conspired not just to produce and promote the film, “but to do so for the purpose of advancing a knowingly false narrative about criminal ballot mules that intentionally defamed Andrews ‘on account of’” his lawful use of a ballot dropbox.

As Trump reiterates his desire to ban “corrupt” mail-in voting, this case continues to make clear: There are limits, and consequences, for reckless lies targeting private citizens who lawfully exercise their right to vote.

Read more about the case here.

What else we’re tracking:

The government is still shut down. Read Cerin Lindgrensavage’s piece on how Russ Vought seizing control of the power of the purse by impounding funds explains the shutdown: Who broke the appropriations process? (Or, watch her talk about it here.)

Here’s a great explainer in The New York Times on the administration’s radical spending moves: ‘Pocket rescissions’ sound obscure, but they’re a big deal.

In the shutdown, the administration has openly and broadly violated the Hatch Act, which bars federal employees from engaging in political activity, by posting political messages on official government websites blaming Democrats for the shutdown.

The administration’s latest attack on universities? Coercing them into signing ideological pledges in exchange for funding preference. (Read more: Universities have no choice.)

Trump may be undermining his own authoritarian project — at least when it comes to prosecuting his enemies. Asawin Suebsaeng and Andrew Perez explain in Zeteo.

Protect Democracy board member Kori Schake, writing in Foreign Policy: Trump’s speech to generals was incitement to violence against Americans.

Read our new report on how fusion voting can be a pathway to proportional representation. Or listen to Jennifer Dresden, one of the authors of the report, on the This Old Democracy podcast.

More than 1,000 DOJ alumni — whose service spans from the administrations of Presidents Eisenhower to Trump — have signed a letter condemning the indictment of former FBI Director James Comey, stating that the “indictment represents an unprecedented assault on the rule of law directed by President Donald Trump.”

You have missed an important piece in the AI debate. That is the cost in terms of energy and water consumption that AI requires, along with the very real decisions made by very real plutocrats about who suffers the side effects of the pollution generated by power generation and the deficit of potable water. AI is indeed "artificial" in the sense of created by humans, but very much NOT "intelligent" in the sense of being able to make consistent and reasonable decisions based on any conceptual framework except that built in by the creators of the particular AI entity. All AI can be "socialized" to transmit the stance of its creators without revealing that its outputs are determined by that stance.

I commend the effort, but ultimately, the best way to protect against authoritarian capture of AI is to not elect authoritarians and put them in charge.

This will ultimately require a change of consciousness and ethics in the electorate and frankly in political and other institutional leadership.

AI is but the latest technology in this period of the Technosphere.

https://medium.com/@jylterps/the-ethics-of-the-technosphere-big-data-artificial-intelligence-surveillance-capitalism-and-the-2acadbd2fb9b